Practical MLOps Explained

What is MLOps?

Machine learning operations (MLOps) is about designing pipelines around machine learning models.

Normally, data scientists prototype in notebooks: gathering data, cleaning it, and then training a model. The final model is presented to stakeholders, who review whether it meets the requirements.

The problem is that notebooks are not production ready. The incentives around notebooks do not promote good software engineering practices. Unit and integration testing become a problem, and the whole process is not reproducible.

The basic principle of MLOps is to treat machine learning models as software. The model and data are tracked together and monitored so that the model performs similarly in any environment.
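One way to picture "tracked together" is to store a fingerprint of the training data next to the model's metadata, so you can later check which data a given model version came from. This is only a minimal sketch using the standard library; the function names, the record layout, and the `churn_model` example are assumptions for illustration, not part of any particular tool.

```python
import hashlib
import json


def fingerprint_rows(rows):
    """Return a stable SHA-256 hex digest for a list of dict rows."""
    # sort_keys makes the digest independent of key order inside each row.
    # Row order still matters: reordered rows give a different digest.
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def build_model_record(model_name, version, params, training_rows):
    """Bundle model metadata with a fingerprint of its training data."""
    return {
        "model_name": model_name,
        "version": version,
        "params": params,
        "data_fingerprint": fingerprint_rows(training_rows),
        "n_rows": len(training_rows),
    }


def data_matches(record, rows):
    """Check whether `rows` is the data the model record was built from."""
    return record["data_fingerprint"] == fingerprint_rows(rows)


# Hypothetical example data and model name.
training_rows = [{"age": 34, "churned": 0}, {"age": 51, "churned": 1}]
record = build_model_record("churn_model", 1, {"max_depth": 3}, training_rows)
print(record["n_rows"], data_matches(record, training_rows))
```

In a real setup the same idea usually lives in an experiment tracker, with the fingerprint or dataset version logged alongside the model.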

Following this guiding principle, the idea is to create a Python package that can be deployed to any environment. It is also important to make sure the data can be fed through feature engineering and into the model.
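To make the package idea concrete, here is a rough sketch of what the stages could look like as plain, importable functions instead of notebook cells. Everything here is an assumption for illustration: the function names, the row format, and the toy least-squares model stand in for whatever your real ETL and model are.

```python
from statistics import mean


def run_etl(raw_rows):
    """Clean raw rows: drop records with missing values and cast to float."""
    return [
        {"x": float(r["x"]), "y": float(r["y"])}
        for r in raw_rows
        if r.get("x") is not None and r.get("y") is not None
    ]


def train(rows):
    """Fit y = slope * x + intercept by ordinary least squares.

    Assumes at least two distinct x values, otherwise sxx is zero.
    """
    x_bar = mean(r["x"] for r in rows)
    y_bar = mean(r["y"] for r in rows)
    sxx = sum((r["x"] - x_bar) ** 2 for r in rows)
    sxy = sum((r["x"] - x_bar) * (r["y"] - y_bar) for r in rows)
    slope = sxy / sxx
    return {"slope": slope, "intercept": y_bar - slope * x_bar}


def predict(model, rows):
    """Score rows with a trained model; each row only needs an "x" value."""
    return [model["slope"] * r["x"] + model["intercept"] for r in rows]


# Hypothetical raw input, including a string value and a missing value.
raw = [{"x": "1", "y": "3"}, {"x": 2, "y": 5}, {"x": None, "y": 1}, {"x": 3, "y": 7}]
clean = run_etl(raw)
model = train(clean)
scores = predict(model, [{"x": 4.0}])
print(len(clean), scores)
```

Because each stage is a normal function, it can be unit tested and called the same way from a scheduler, a CI job, or a local shell.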

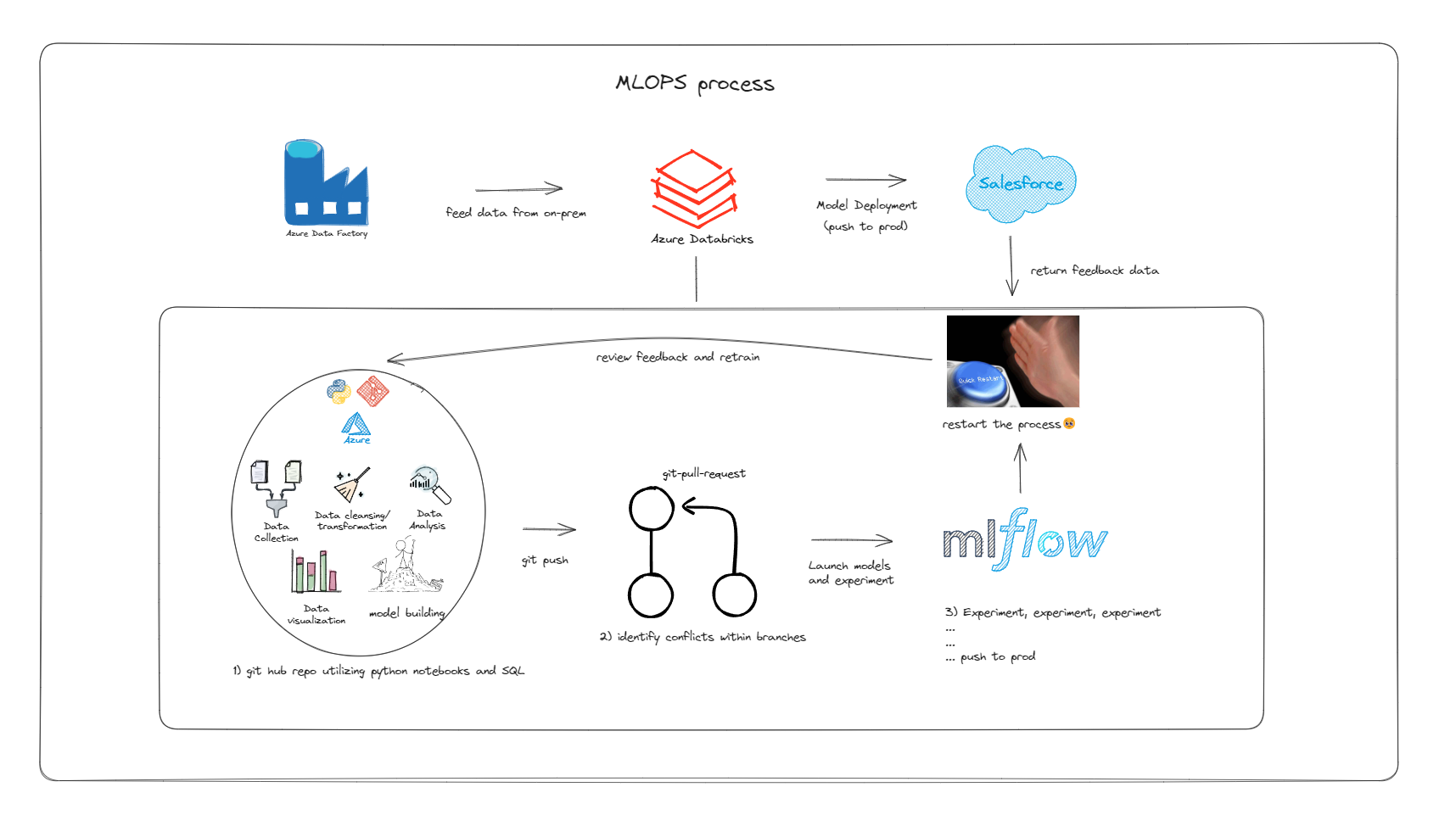

My Simple Design

Following that idea, you layer a tech stack on top of it: GitHub, Azure DevOps, or AWS CodeDeploy. It all works the same. Luckily, Databricks takes care of most of the platform engineering problems. I can write deployment YAML and configs to control where my data flows and how the individual workflow processes run, from ETL to model training and batch predictions.
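The "configs control where my data flows" part can be sketched in Python as a small per-environment lookup. This is not the actual deployment file format and not my real config; the environment names, catalog names, and the `scheduled` flag are all made up to show the shape of the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvConfig:
    """Settings that change between environments (all values hypothetical)."""
    name: str
    catalog: str      # where tables are read from and written to
    scheduled: bool   # whether the workflow runs on a schedule here


ENVIRONMENTS = {
    "dev": EnvConfig(name="dev", catalog="dev_catalog", scheduled=False),
    "staging": EnvConfig(name="staging", catalog="staging_catalog", scheduled=False),
    "prod": EnvConfig(name="prod", catalog="prod_catalog", scheduled=True),
}


def resolve(env_name):
    """Look up the config for an environment, failing loudly on typos."""
    try:
        return ENVIRONMENTS[env_name]
    except KeyError:
        raise ValueError(f"unknown environment: {env_name!r}") from None


def table_name(env_name, schema, table):
    """Build the fully qualified table name for the given environment."""
    cfg = resolve(env_name)
    return f"{cfg.catalog}.{schema}.{table}"


print(table_name("prod", "sales", "predictions"))
```

The package code stays the same everywhere; only the environment name passed in by CI/CD changes.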

In this setup:

- I designed a Python package that does ETL, model training, and predictions.

- CI/CD into Databricks for automatic deployment and workflow scheduling.

- Models are stored in MLflow and registered models are ready to be transitioned into different stages.

- Registered production models are deployed for batch predictions.

- Batch prediction tables are shared via SQL warehouse to Salesforce.

- Feedback is later processed and used to retrain the model.
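The feedback step at the end of this list can be sketched as a join between past batch predictions and the outcomes that come back. The field names (`record_id`, `features`, `predicted`, `outcome`) are assumptions for illustration; the real tables will look different.

```python
def build_retraining_rows(predictions, feedback):
    """Attach observed outcomes to past predictions, keyed by record id.

    Predictions without feedback yet are skipped.
    """
    outcome_by_id = {f["record_id"]: f["outcome"] for f in feedback}
    rows = []
    for p in predictions:
        if p["record_id"] in outcome_by_id:
            rows.append({
                "record_id": p["record_id"],
                "features": p["features"],
                "predicted": p["predicted"],
                "label": outcome_by_id[p["record_id"]],
            })
    return rows


def accuracy(rows):
    """Share of labeled rows where the prediction matched the outcome."""
    if not rows:
        return None
    return sum(r["predicted"] == r["label"] for r in rows) / len(rows)


# Hypothetical batch predictions and the feedback received so far.
predictions = [
    {"record_id": 1, "features": {"age": 34}, "predicted": 0},
    {"record_id": 2, "features": {"age": 51}, "predicted": 1},
    {"record_id": 3, "features": {"age": 42}, "predicted": 1},
]
feedback = [
    {"record_id": 1, "outcome": 0},
    {"record_id": 2, "outcome": 0},
]

retraining_rows = build_retraining_rows(predictions, feedback)
print(len(retraining_rows), accuracy(retraining_rows))
```

The labeled rows feed the next training run, and the accuracy number is a cheap signal for when a retrain is worth scheduling.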

Problems with the design

You really want to take a look at how the different environments are set up and whether your deployment can reach across different workspaces. If the cloud team is unable to set up security permissions for CI/CD to do its work, you've got bigger problems, mate.

Databricks really takes a lot of problems away from you. If you have a different setup, you might need to consider how you're managing your PySpark clusters, your MLflow instance for model versioning, and Airflow for orchestration.